One of the most common data file formats I have to deal with is CSV files. Assuming your file is well formed (i.e. starts in the top row, with or without a header, in a nice continuous table), then there is no difficulty. Drag the file into Alteryx’s designer and it will create an Input tool all configured automatically. For example, a CSV file like

It will search for files as well if you put in a wildcard within the filename (e.g. *.csv). It can be set to add the filename where the row came from to the dataset. It will only read the header from the first entry, so this is only useful if all files are in identical format. If the headers don’t all match the initial one, the input tool will report a warning to the output. If the number of columns don’t match then the Input tool will skip the file, again with a warning.

One small thing to note, is that it doesn’t parse the inputs. All input data is left as text and you need to use a combination of select and formula tools to convert into the true data types you need. For the format above, just one select tool is sufficient to cast the types, but you will need to use the formula tool in harder cases.

Dynamic Filenames

At first, I couldn’t figure out how to make Alteryx pick up a file with a specific but variable date in the filename, without hard coding it in the tool. Most of the files our systems generate are prefixed with the date. For example, ‘2015-03-13 Prices.csv’. While this isn’t possible within the Input Tool, there is a Dynamic Input tool, which allows you to adjust the filename based on another part of the flow.

The first part of the flow is to generate the required date. There is a DateTime Now tool built into Alteryx which returns the current date (or time) as a string in the specified format (I tend to use yyyy-MM-dd format as it easy to work with and unambiguous). Most of the time I am looking for a file for the previous business day. As most of the Date Time functions work with a Date value, first I needed cast the output to a date and then use formulae to generate the required date in the format needed. As the formula tool allows you to reference previous steps of the calculation, it is possible to parse the text, create the required date and then format the output in one tool. The table below is the 3 steps I used to create the previous date (note you need %Y in the DateTimeFormat to generate 4 digit years).

| Date | Date Value | DateTimeParse([DateTimeNow],”%y-%m-%d”) |

| Prev | Prev Business Day | DateTimeAdd([Date], -Switch(DateTimeFormat([Date],”%a”),1,”Sun”,2,”Mon”,4) , “days”) |

| PrevTxt | Prev in yyyy-MM-dd | DateTimeFormat([Prev],”%Y-%m-%d”) |

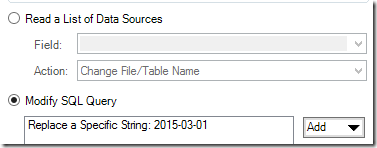

After that you need to pick a sample file for the dynamic input tool. This will set up the format settings in a modal dialog much like the Input tool. The properties box then allows you to replace all or part of the filename from an incoming connection. To replace all of the filename use the ‘Read a List of Data Sources’ and select action ‘Change File/Table Name’. To replace part of the filename then select Modify SQL Query.

Click Add then select ‘Replace a Specific String’ and then select the date part of the sample file name and enter the field of the date (‘PrevTxt’ in my case):

This creates a flow which can be run each day and will extract the data from the previous day data files. As with the input tool, you can use a wildcard in the filename and include the filename in the output as a field.

The dynamic input tool can also take a list of file names from the directory tool, or be used to create dynamic SQL queries. Combined with macros, I have found this a great way to create some components allowing me to access all of my company’s internal database tables and files very quickly and easily.

Nasty Formats

If you have flat files with headers or footers, or with lines to ignore within the data set then you need to take more control over the parsing process. In order to do this, I wanted to read in the file as a single blob. While I expect this is possible with the standard Input Tool, I found this much easier to achieve with the Download Tool. As an example of this problem, I looked at dealing with a file which contains a couple of header rows like this:

The one complication with the download tool is that it takes a URI not a filename. It does however support the file:/// URI syntax. You can hence convert a filename to a URI using a formula tool. I do not believe you can use the file:// syntax for UNC resources (you can read details on File URIs here). The formula I use is:

iif(FindString([FileName],”://”)!=-1,[FileName],”file:///”+Replace([FileName], “\”, “/”))

This will not convert URIs and so I can feed in either to the download tool. You do not need to encode the filenames as the download tool will do this by default. Though if you are mixing and matching URIs and filenames you will need to ensure that the URIs are not encoded as well.

I tried the following for working with UNC paths as well, but got errors from the download tool (shown on right):

iif(FindString([FileName],”://”)!=-1,[FileName],

iif(Left([FileName],2)=”\\”, “file:”, “file:///”)

+ Replace([FileName], ‘\’, “/”))

I can then use a download tool to read in the file. It doesn’t parse the contents of the file at all and just brings the contents into a column called ‘Download Data’. The contents of the file will be in the field value as a string unless there is a problem reading the file then the error will be similar to the one above. For http and ftp requests, the DownloadHeaders will allow you to see if there was a problem.

The next task is to split the string into rows. Use a select tool to reduce the columns back to the original FileName and the Download data, then use the Text To Column tool. If you put the delimiter in as ‘\n’ then it will split on new lines (it appears to be agnostic to UNIX or Windows style line endings). You can specify that you want to split the output to new lines. Likewise if you need to handle text within quotation marks this is available within the Advanced options. The configuration looks like this:

The next task is to split the string into rows. Use a select tool to reduce the columns back to the original FileName and the Download data, then use the Text To Column tool. If you put the delimiter in as ‘\n’ then it will split on new lines (it appears to be agnostic to UNIX or Windows style line endings). You can specify that you want to split the output to new lines. Likewise if you need to handle text within quotation marks this is available within the Advanced options. The configuration looks like this:

Following that, it is easy to filter out the rows of the data I don’t need. You can then use either Filter tool to remove the rows using a conditional statement or the Sample Tool to skip the a number of rows at the beginning or end of the file as needed. If you use the sample tool, you can select grouping to be ‘FileName’ so that it skips lines in each file.

In order to make processing easier, I find it best to have a row number in the data set. There is a Multi-Row Formula tool which allows you to create a row number. The expression needs to restart the counter every time the filename changes so something like ‘IIF([Row-1:FileName]=[FileName],[Row-1:_Row],0)+1’ should do.

After that we need to break each row into it’s columns. Again the Text To Column tool can do this. Assuming you have a fixed number of columns you can ‘Split to Columns’, however I find it easier to split to rows as this creates a data flow which is flexible to different file layouts. Configuration is pretty much identical to the case above but the delimiter is ‘,’ this time. I then add another multi-row formula to create a column number, this time it reset every time row or filename changes. At this point the data looks like:

The last task to create the raw data set is to join the headers back onto the data rows. Using a filter block to separate the data based on whether Row = 1 or not and then join the two sets on FileName and Column. A little re-labelling of the inputs and selection of fields allow us to create a row based version of the CSV file.

The final task would be to shape the data into the form needed. In this case it is keyed by Date, so using a similar flow as to how the field was applied to replace _Row with Date and then use a Cross Tab tool to pivot the data to a column based table:

The completed workflow looks like:

You can download this workflow here:

Nice work James. Please keep the Alteryx blogs coming!

LikeLike

Thank you for this useful walk-through.

I got stuck at this step:

“I then add another multi-row formula to create a column number, this time it reset every time row or filename changes.”

My result isn’t turning out correctly, because I’ve probably got that Multi-Row Formula tool expression wrong.

I’m not sure how to check whether the “row OR the filename changes.” The second time you use the Multi-Row Formula tool to create a column number, how does the tool expression differ from the first time you use it to create row numbers?

LikeLike

Ref. my previous comment.

Just managed to solve it, after banging my head for a while.

What threw me off was the ternary-like function IFF().

Here is the working expression I used for the second Multi-Row Formula tool:

IF [Row-1:FileName]=[FileName] AND [Row-1:_Row]=[_Row] THEN [Row-1:_Column]+1 ELSE 1 ENDIF

In case anyone else stumbles upon the same issue in the future.

LikeLike

Glad you solved it.

You can also do it using the grouping options within the Multi Row formula so that it resets every time the file name or row changes.

James

LikeLike